This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

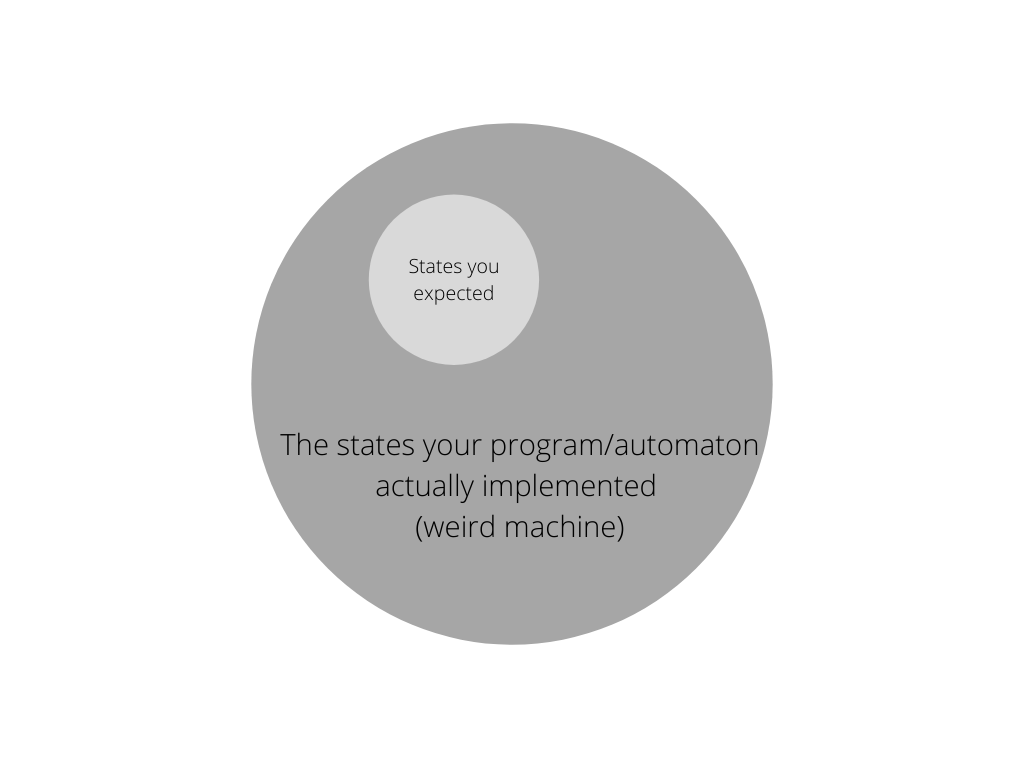

Almost every codebase contains some states and transitions that really exist but that the developers were unaware of. Together these make up the "weird machine".

What hackers do is find these unexpected state transitions and then force the target program (the automaton) into an unexpected state through them. The transitions are unexpected only from the programmer's perspective, and they are triggered by a crafted data flow. This is weird-machine-oriented programming.
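As a rough illustration of such a transition, here is a toy sketch in Python. Everything in it is hypothetical (the `Session` class, the 8-byte buffer, the one-byte privilege flag placed right after it); it only simulates the kind of memory layout a real program might have. The developer's mental model has a single state, GUEST, for any packet, but the implemented automaton also has a GUEST to ADMIN transition that a crafted length field can trigger.

```python
BUF_SIZE = 8  # hypothetical buffer size, chosen for illustration


class Session:
    """Toy automaton: 8 bytes of input buffer followed by a 1-byte privilege flag."""

    def __init__(self):
        self.mem = bytearray(BUF_SIZE + 1)  # mem[BUF_SIZE] is the flag

    @property
    def state(self):
        return "ADMIN" if self.mem[BUF_SIZE] else "GUEST"

    def handle(self, packet: bytes) -> None:
        # Packet format (assumed): first byte is the payload length.
        length = packet[0]
        payload = packet[1:1 + length]
        # Bug: length is never checked against BUF_SIZE, so a 9-byte
        # payload writes past the buffer and into the privilege flag.
        for i, b in enumerate(payload):
            self.mem[i] = b


s = Session()
s.handle(bytes([5]) + b"hello")          # the input the developer expected
print(s.state)                           # GUEST

crafted = bytes([9]) + b"A" * 8 + b"\x01"
s.handle(crafted)                        # the input the developer did not expect
print(s.state)                           # ADMIN
```

Nothing in `handle` mentions privileges at all; the transition exists only because the data and the flag share the same memory, which is why it is easy to overlook.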

The only way for developers to write secure code is to make their expectations precisely match the state machine they are actually implementing, either by expanding their expectations to cover what the code really does or by reducing the "weird part" of their program.
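One way to picture "reducing the weird part" is to write the expected transitions down explicitly and refuse everything else. The sketch below is only an illustration under that assumption; the states, events, and the `UnexpectedTransition` exception are made-up names, not taken from any real system.

```python
# Hypothetical transition table: the developer's expectations, written as data.
ALLOWED = {
    ("LOGGED_OUT", "login_ok"): "LOGGED_IN",
    ("LOGGED_OUT", "login_fail"): "LOGGED_OUT",
    ("LOGGED_IN", "logout"): "LOGGED_OUT",
}


class UnexpectedTransition(Exception):
    """Raised for any (state, event) pair the developer did not anticipate."""


def step(state: str, event: str) -> str:
    # Anything outside the table is rejected instead of being
    # handled by whatever the code happens to do.
    try:
        return ALLOWED[(state, event)]
    except KeyError:
        raise UnexpectedTransition(f"{event!r} in state {state!r}") from None


state = step("LOGGED_OUT", "login_ok")   # an expected transition
try:
    step("LOGGED_OUT", "logout")         # not in the table, so refused
except UnexpectedTransition as exc:
    print("rejected:", exc)
```

This does not remove weirdness in the layers below (the interpreter, the OS, the hardware), but at this level the implemented machine and the expected machine are the same table.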

The key question is whether the weird part of the target program contains unexpected and exploitable behavior that tainted data flows can trigger.

"The system's security is defined by what computations can and cannot occur in it under all possible inputs" [2]. After all, cybersecurity research, and vulnerability exploitation in particular, is about figuring out how things really work under all possible (and impossible :) circumstances, both expected and unexpected. That is, it is about exploring the vast unexpectedness.

Just my humble opinion, feel free to correct me.

References:

[1] http://langsec.org/papers/Bratus.pdf